Update 2024: We now use Apache Kvrocks because ideawu has vanished, leaving SSDB unmaintained.

Redis is an in-memory database with very high read and write speeds and one major limitation: the data set can’t be larger than available RAM. It’s like memcached, but it supports data structures as values instead of just strings. Redis is great for caching lookups to AWS S3 from an external server, which can speed up your S3 reads and save you money on outgoing data transfer costs. However, with your entire data set limited to the RAM available on the machine, you can only cache a small fraction of your possible AWS S3 keys. Also, if snapshotting ever fails because you’ve used more RAM than is available, your Redis instance is forced into read-only mode. This means your production Redis database might suddenly stop allowing writes until you restart it, yikes!😱

Redis Cluster

Right now, you might be thinking of a solution… shard the data across a multi-machine Redis Cluster. That increases your max OPS, but also has some downsides:

- more machines to maintain, trading one problem for another

- RAM is expensive, costs add up fast

Caching S3 with Redis

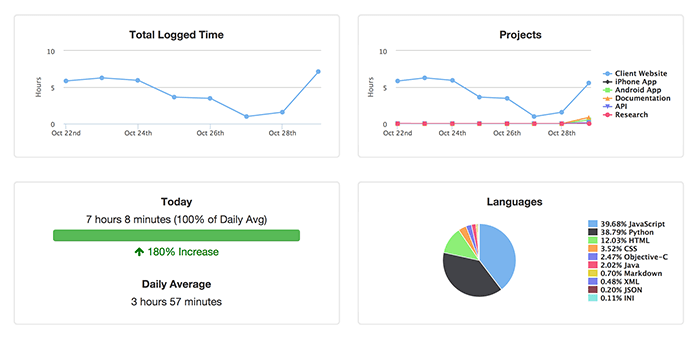

WakaTime uses S3 as a database for user code stats, because hosting for traditional relational databases with data sets in the terabytes is expensive. Not to mention traditional relational databases don’t have the reliability, resiliency, scalability, and serverless features of S3.

WakaTime hosts programming dashboards on DigitalOcean servers. That’s because DigitalOcean servers come with dedicated attached SSDs, and overall give you much more compute bang for the buck than EC2. DigitalOcean’s S3-compatible object storage service (Spaces) is very inexpensive, but we couldn’t use it for our primary database because it’s much slower than S3.

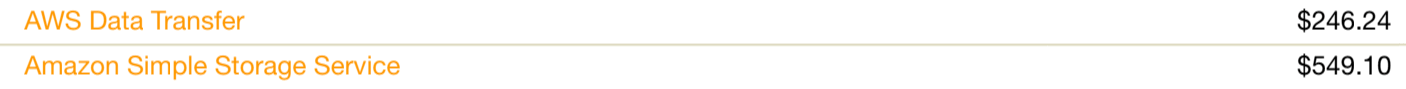

Transferring data from S3 to DigitalOcean servers costs $0.09 per GB, because Amazon charges a higher price for outgoing data transfers vs internal transfers. Our monthly data transfer costs were $246. Also, S3 charges separately for every read/write/list operation performed. The request operations portion of our S3 bill was $549/mo. Using S3 as a database was becoming a significant portion of our monthly AWS bill.
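To put those figures in perspective, here is a rough back-of-the-envelope calculation. It assumes the whole $246 was billed at the flat $0.09 per GB rate quoted above, ignoring any free tier or volume discounts, so treat the result as an estimate only.

```python
# Rough estimate of monthly outbound transfer volume from the figures above.
# Assumption: the entire transfer bill was charged at a flat $0.09 per GB.
TRANSFER_PRICE_PER_GB = 0.09   # USD per GB transferred out of S3
MONTHLY_TRANSFER_BILL = 246.0  # USD per month
MONTHLY_REQUEST_BILL = 549.0   # USD per month for read/write/list operations


def estimate_transfer_gb(bill_usd: float, price_per_gb: float) -> float:
    """Return the data volume (in GB) that a transfer bill corresponds to."""
    return bill_usd / price_per_gb


transfer_gb = estimate_transfer_gb(MONTHLY_TRANSFER_BILL, TRANSFER_PRICE_PER_GB)
combined_bill = MONTHLY_TRANSFER_BILL + MONTHLY_REQUEST_BILL

print(f"Estimated outbound transfer: {transfer_gb:,.0f} GB per month")
print(f"Transfer plus requests: ${combined_bill:,.0f} per month")
```

Under that assumption, the transfer bill corresponds to roughly 2.7 TB of outbound data per month, and transfer plus request charges together come to $795 per month.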

Data storage in S3 is cheap; it’s the transfer and operations that get expensive. S3 also comes with built-in replication and reliability, so we want to keep using it as our database. To reduce costs and improve performance, we tried caching S3 reads with Redis. However, with the cache size limited by RAM, it barely made a dent in our reads from S3. The RAM we would need to cache multiple terabytes of S3 data would easily cost more than our total AWS bill. Instead, we tried several disk-based Redis alternatives and found one that fit our needs perfectly.
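Caching S3 reads this way is usually called the cache-aside pattern. The sketch below is a minimal illustration, not our production code: `cached_read`, `DictCache`, and `fake_s3_fetch` are hypothetical names, the cache is any object with Redis-style `get` and `set` methods (a redis-py client should fit, which is an assumption), and the S3 read is simulated so the example runs on its own.

```python
from typing import Callable, Optional, Protocol


class KeyValueCache(Protocol):
    """Minimal Redis-style interface assumed by this sketch."""

    def get(self, key: str) -> Optional[bytes]: ...
    def set(self, key: str, value: bytes) -> object: ...


def cached_read(
    key: str,
    cache: KeyValueCache,
    fetch_from_s3: Callable[[str], bytes],
) -> bytes:
    """Return the object for `key`, reading S3 only on a cache miss."""
    value = cache.get(key)
    if value is not None:
        return value  # cache hit: no S3 request, no transfer cost
    value = fetch_from_s3(key)  # cache miss: pay for one S3 read
    cache.set(key, value)  # store it so the next read is served locally
    return value


class DictCache:
    """In-memory stand-in for the cache, used only for this demonstration."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def get(self, key: str) -> Optional[bytes]:
        return self._data.get(key)

    def set(self, key: str, value: bytes) -> object:
        self._data[key] = value
        return True


s3_reads = []  # records every simulated S3 request


def fake_s3_fetch(key: str) -> bytes:
    """Hypothetical replacement for a real S3 GET."""
    s3_reads.append(key)
    return f"stats for {key}".encode()


cache = DictCache()
first = cached_read("user/1/2024-01-01", cache, fake_s3_fetch)
second = cached_read("user/1/2024-01-01", cache, fake_s3_fetch)
print(first == second, len(s3_reads))
```

Only the first call reaches the simulated S3. The share of reads served from the cache is what drives the savings, which is why cache capacity matters so much here.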

SSDB - A Redis alternative

We’ve been using a disk-backed Redis alternative called SSDB in production for over 3 years now. SSDB is a drop-in replacement for Redis, so you don’t need to change any client libraries. It uses LevelDB (a key-value storage library by Google) behind the scenes to achieve performance comparable to Redis. According to benchmarks, writes to SSDB are slightly slower but reads are actually faster than Redis!😲

SSDB has built-in replication and supports clustering with twemproxy.

Most importantly, it stores your data set on disk and uses RAM for caching.

Our SSDB data set is 500GB and does a good job of lowering the number of reads we make to S3.

Remember to increase the file-max of your SSDB server to at least 10k to prevent errors like “Too many open files” or “Connection reset by peer”.
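One way to sanity-check the limit is with Python’s standard `resource` module (Unix only). This is a sketch under an assumption: it treats “file-max” as the per-process open file descriptor limit, and it reports the limit of the process running the script, so it only says something about SSDB if it runs under the same user and service settings. `is_limit_sufficient` is a hypothetical helper name.

```python
import resource

MIN_OPEN_FILES = 10_000  # the "at least 10k" threshold from the text


def is_limit_sufficient(soft_limit: int, minimum: int = MIN_OPEN_FILES) -> bool:
    """Return True if an open-file soft limit meets the minimum."""
    if soft_limit == resource.RLIM_INFINITY:
        return True  # unlimited always satisfies the minimum
    return soft_limit >= minimum


# Soft and hard limits on open file descriptors for the current process.
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
status = "ok" if is_limit_sufficient(soft) else "too low"
print(f"open files: soft={soft} hard={hard} ({status})")
```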

Also, remember to periodically trigger garbage collection on your SSDB data files by running compact through ssdb-cli from your crontab:

newline=$'\n' && ssdb-cli <<< "compact${newline}q"

Otherwise, your SSDB disk usage will keep growing even after you delete keys.
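If you would rather trigger compaction from a scheduled Python job than from a shell one-liner, the same input can be piped to ssdb-cli with `subprocess`. This is a sketch, assuming `ssdb-cli` is on the PATH and connects to your server without extra arguments, as the command above does. `run_compaction` and its `command` parameter are hypothetical names for illustration.

```python
import subprocess
from typing import Sequence

# Same input the shell one-liner feeds to ssdb-cli: "compact", then "q" to quit.
COMPACT_SCRIPT = "compact\nq\n"


def run_compaction(command: Sequence[str] = ("ssdb-cli",)) -> str:
    """Pipe the compact command into ssdb-cli and return whatever it printed."""
    result = subprocess.run(
        list(command),
        input=COMPACT_SCRIPT,
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError on a non-zero exit status
    )
    return result.stdout
```

Calling `run_compaction()` from your scheduler then mirrors the crontab command. Because of `check=True`, a failed run raises an exception rather than passing silently.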

Conclusion

Using SSDB to cache S3 has significantly reduced the outbound data transfer portion of our AWS bill! 🎉 With performance comparable to Redis and using the disk to bypass Redis’s RAM limitation, SSDB is a powerful tool for lean startups.

If you liked this post, browse similar writeups using the devops tag. To get started with your free code time insights today, install the WakaTime plugin for your IDE.

Alan Hamlett
